I come to you today, admitting a deep problem. I can’t help but waste money training language models. I don’t even do anything with them. I don’t publish them anywhere. I don’t run evals. I just love watching the logs. That being said, I still want to do it all as cheaply as possible. I have $5,000 in AWS credits from incorporating an LLC with Firstbase.io (not sponsored). If I want to watch more log lines, I need to make that money stretch as far as I can, until I can convince someone to give me money with the expectation that I do absolutely nothing with it.

I tweeted the other day asking people if they’d be interested in learning how I’m fine-tuning models for $0.03 per million tokens, and at a whopping 7 likes, it’s my most liked tweet of all time. So here I am.

This isn’t a tutorial, so don’t expect a step-by-step breakdown of everything that I did. Although, if you’d be interested in that, let me know! I’ve been thinking about doing an online course or video series walking you through actually setting up a Kubernetes cluster for this. For now, I’ll keep it high level, covering all of the infra and underlying pieces that I’m actually using.

How do I get my GPUs?

Let’s start with the actual infrastructure, then, shall we? I’m still running the very same Amazon Elastic Kubernetes Service (EKS) cluster that I’ve talked about before, though since that post some things have stayed the same and some things have changed. I use Karpenter.sh as my cluster autoscaler, which provisions nodes for me. With that installed in the cluster, I use the EC2NodeClass and NodePool definitions below.

Note: My EKS cluster is named “red” and my GPU node group is named “green”.

```yaml
apiVersion: karpenter.k8s.aws/v1beta1
kind: EC2NodeClass
metadata:
  name: green-gpu
spec:
  amiFamily: AL2
  blockDeviceMappings:
    - deviceName: /dev/xvda
      ebs:
        encrypted: true
        volumeSize: 100Gi
        volumeType: gp3
  role: green-eks-node-group-20230615015942833200000001
  securityGroupSelectorTerms:
    - tags:
        karpenter.sh/discovery: red
  subnetSelectorTerms:
    - tags:
        karpenter.sh/discovery: red
  tags:
    karpenter.sh/discovery: red
---
apiVersion: karpenter.sh/v1beta1
kind: NodePool
metadata:
  name: green-gpu
spec:
  disruption:
    consolidationPolicy: WhenUnderutilized
    expireAfter: 1h
  limits:
    cpu: 100
    memory: 200Gi
    nvidia.com/gpu: 4
  template:
    spec:
      nodeClassRef:
        name: green-gpu
      requirements:
        - key: karpenter.sh/capacity-type
          operator: In
          values:
            - spot
        - key: kubernetes.io/arch
          operator: In
          values:
            - amd64
        - key: karpenter.k8s.aws/instance-category
          operator: In
          values:
            - g
        - key: karpenter.k8s.aws/instance-generation
          operator: Gt
          values:
            - "5"
        - key: karpenter.k8s.aws/instance-gpu-count
          operator: In
          values:
            - "1"
        - key: karpenter.k8s.aws/instance-cpu
          operator: Lt
          values:
            - "64"
        - key: kubernetes.io/os
          operator: In
          values:
            - linux
      taints:
        - effect: NoSchedule
          key: nvidia.com/gpu
          value: "true"
```

Reminder: let me know if you’d be interested in an end-to-end tutorial for setting up a cluster. For now, I’ll just point you to the Karpenter getting started docs. The node requirement selectors are very deliberate. I’m requesting g6 family nodes through the instance-category "g" and instance-generation greater-than-5 requirements, which gets me NVIDIA L4 GPUs. These GPUs have 24GB of GPU memory and are typically marketed more for inference, but for this broke boy, we’ll just have to make do. I only provision spot lifecycle nodes, which helps with the pricing, but I’ll break that down more in a later section.
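To make the selector logic concrete, here is a simplified Python model of how those requirements narrow the candidate pool. This is not how Karpenter evaluates requirements internally: the instance table is a small, hand-copied subset of AWS’s published specs (worth re-checking against the EC2 documentation), keys drop the `karpenter.k8s.aws/` prefix, the spot/arch/OS requirements are left out, and `Requirement` and `eligible` are hypothetical helpers.

```python
from dataclasses import dataclass

# Illustrative subset of instance specs; verify against the EC2 docs.
# Keys mirror the karpenter.k8s.aws/* labels used in the NodePool.
INSTANCES = [
    {"name": "g5.xlarge", "instance-category": "g", "instance-generation": 5, "instance-gpu-count": 1, "instance-cpu": 4},
    {"name": "g6.xlarge", "instance-category": "g", "instance-generation": 6, "instance-gpu-count": 1, "instance-cpu": 4},
    {"name": "g6.2xlarge", "instance-category": "g", "instance-generation": 6, "instance-gpu-count": 1, "instance-cpu": 8},
    {"name": "g6.4xlarge", "instance-category": "g", "instance-generation": 6, "instance-gpu-count": 1, "instance-cpu": 16},
    {"name": "g6.8xlarge", "instance-category": "g", "instance-generation": 6, "instance-gpu-count": 1, "instance-cpu": 32},
    {"name": "g6.12xlarge", "instance-category": "g", "instance-generation": 6, "instance-gpu-count": 4, "instance-cpu": 48},
    {"name": "g6.16xlarge", "instance-category": "g", "instance-generation": 6, "instance-gpu-count": 1, "instance-cpu": 64},
]


@dataclass
class Requirement:
    key: str
    operator: str  # only the "In", "Gt", and "Lt" operators used above
    values: list

    def matches(self, instance: dict) -> bool:
        actual = instance[self.key]
        if self.operator == "In":
            return str(actual) in self.values
        if self.operator == "Gt":
            return int(actual) > int(self.values[0])
        if self.operator == "Lt":
            return int(actual) < int(self.values[0])
        raise ValueError(f"unsupported operator: {self.operator}")


REQUIREMENTS = [
    Requirement("instance-category", "In", ["g"]),
    Requirement("instance-generation", "Gt", ["5"]),
    Requirement("instance-gpu-count", "In", ["1"]),
    Requirement("instance-cpu", "Lt", ["64"]),
]


def eligible(instances, requirements):
    """Names of instances that satisfy every requirement (requirements are ANDed)."""
    return [i["name"] for i in instances if all(r.matches(i) for r in requirements)]


print(eligible(INSTANCES, REQUIREMENTS))
```

Against this table, the generation filter drops the g5, the GPU-count filter drops the 4-GPU g6.12xlarge, and the strict `Lt 64` drops the 64-vCPU g6.16xlarge, leaving g6.xlarge through g6.8xlarge. Since the filter works on labels rather than GPU model, any other generation-6-or-newer `g` family with one GPU and fewer than 64 vCPUs would also pass; Karpenter’s GPU-name label is one option to look into if pinning to L4 matters.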

What code do I run to train the models?

Selecting for L4 GPUs is very intentional, as L4 GPUs are based on NVIDIA’s Ada Lovelace architecture. This ties into the actual software stack that I’m using. Back in December, a good friend of mine messaged me about a new project that got posted to /r/LocalLLaMA. That project was called unsloth, and it made extraordinary claims about speeding up Llama 2 training: “80% faster and 50% less memory” was pretty big. To be perfectly honest, I haven’t personally measured the difference between using unsloth and not using it, but I appreciate the tenacity. Since then, I’ve had a few conversations with the technical mind behind it, @danielhanchen, and I must say, he’s a very friendly and very brilliant guy. It’s amazing what a cold email can do!

My code is based heavily on Unsloth’s own Google Colab example. Here’s my fine-tuning code, verbatim.

```python
from datasets import load_dataset
from datetime import datetime
from transformers import TrainingArguments, TrainerState
from transformers.trainer_utils import get_last_checkpoint
from trl import SFTTrainer
from unsloth import FastLanguageModel
import os
import shutil
import torch

max_seq_length = 8192

model, tokenizer = FastLanguageModel.from_pretrained(
    model_name="unsloth/llama-3-8b-bnb-4bit",
    max_seq_length=max_seq_length,
    dtype=None,
    load_in_4bit=True,
)

model = FastLanguageModel.get_peft_model(
    model,
    r=16,
    target_modules=[
        "q_proj",
        "k_proj",
        "v_proj",
        "o_proj",
        "gate_proj",
        "up_proj",
        "down_proj",
    ],
    lora_alpha=16,
    lora_dropout=0,
    bias="none",
    use_gradient_checkpointing="unsloth",
    random_state=1337,
    use_rslora=False,
    loftq_config=None,
)

alpaca_prompt = """Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.

### Instruction:
{}

### Input:
{}

### Response:
{}"""

EOS_TOKEN = tokenizer.eos_token


def formatting_prompts_func(examples):
    instructions = examples["instruction"]
    inputs = examples["input"]
    outputs = examples["output"]
    texts = []
    for instruction, input, output in zip(instructions, inputs, outputs):
        text = alpaca_prompt.format(instruction, input, output) + EOS_TOKEN
        texts.append(text)
    return {
        "text": texts,
    }


dataset = load_dataset("yahma/alpaca-cleaned", split="train")
dataset = dataset.map(
    formatting_prompts_func,
    batched=True,
)

trainer = SFTTrainer(
    model=model,
    tokenizer=tokenizer,
    train_dataset=dataset,
    dataset_text_field="text",
    max_seq_length=max_seq_length,
    dataset_num_proc=2,
    packing=False,
    args=TrainingArguments(
        per_device_train_batch_size=4,
        gradient_accumulation_steps=4,
        include_num_input_tokens_seen=True,
        warmup_steps=5,
        num_train_epochs=1,
        save_steps=50,
        save_total_limit=3,
        learning_rate=2e-4,
        fp16=not torch.cuda.is_bf16_supported(),
        bf16=torch.cuda.is_bf16_supported(),
        logging_steps=5,
        optim="adamw_8bit",
        weight_decay=0.01,
        lr_scheduler_type="linear",
        seed=1337,
        output_dir="/mnt/outputs",
    ),
)


def get_checkpoints(checkpoint_dir):
    checkpoints = [
        os.path.join(checkpoint_dir, d)
        for d in os.listdir(checkpoint_dir)
        if os.path.isdir(os.path.join(checkpoint_dir, d))
    ]
    checkpoints.sort(reverse=True, key=lambda x: int(x.split("-")[-1]))
    return checkpoints


def safe_resume_training(trainer, checkpoint_dir):
    checkpoints = get_checkpoints(checkpoint_dir)
    for checkpoint in checkpoints:
        try:
            trainer_state_file = os.path.join(checkpoint, "trainer_state.json")
            TrainerState.load_from_json(trainer_state_file)
            print(f"Resuming training from {checkpoint}")
            trainer.train(resume_from_checkpoint=checkpoint)
            return
        except FileNotFoundError as e:
            print(f"Error: {e}")
            print(f"The checkpoint {checkpoint} is incomplete and will be skipped.")
            shutil.rmtree(checkpoint, ignore_errors=True)
    print("No valid checkpoint found. Starting training from scratch.")
    trainer.train()


start_time = datetime.now()
safe_resume_training(trainer, "/mnt/outputs")
print("Training took: ", datetime.now() - start_time)
trainer.save_state()
model.save_pretrained("/mnt/llama3_lora_model")
```

I had ChatGPT generate the code for cleaning up faulty checkpoints. Since I’m running on spot nodes, I occasionally get nodes taken away from me that don’t get to fully checkpoint, so this is my solution for robustness.
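One fragile spot in the helper above is `int(x.split("-")[-1])`: it assumes every subdirectory of `/mnt/outputs` is named `checkpoint-<step>`, so any other directory there would raise a `ValueError`. Another is that the `try` wraps `trainer.train()`, so an unrelated `FileNotFoundError` raised mid-training would delete that checkpoint. Below is a stdlib-only sketch of a more defensive discovery step; `find_resumable_checkpoints` is a hypothetical name, and "complete" here only means `trainer_state.json` exists and parses as JSON, which approximates but is not identical to `TrainerState.load_from_json`.

```python
import json
import os
import re

# Matches the directory names Hugging Face's Trainer uses: checkpoint-<global_step>
CHECKPOINT_RE = re.compile(r"^checkpoint-(\d+)$")


def find_resumable_checkpoints(output_dir):
    """Return (complete, incomplete) checkpoint paths, newest first.

    A checkpoint counts as complete if trainer_state.json exists and parses
    as JSON. This is a heuristic, not a full integrity check of the weights.
    """
    if not os.path.isdir(output_dir):
        return [], []  # first run: nothing has been written yet

    steps = []
    for name in os.listdir(output_dir):
        match = CHECKPOINT_RE.match(name)
        path = os.path.join(output_dir, name)
        if match and os.path.isdir(path):  # ignore non-checkpoint entries
            steps.append((int(match.group(1)), path))
    steps.sort(reverse=True)  # highest global step first

    complete, incomplete = [], []
    for _, path in steps:
        state_file = os.path.join(path, "trainer_state.json")
        try:
            with open(state_file) as f:
                json.load(f)
            complete.append(path)
        except (FileNotFoundError, json.JSONDecodeError):
            incomplete.append(path)
    return complete, incomplete
```

A caller could `shutil.rmtree` the incomplete paths first and then pass `complete[0]` (if any) to `trainer.train(resume_from_checkpoint=...)`. Keeping deletion separate from the training call means nothing raised during training can trigger a checkpoint deletion.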

Notice that I’m using Unsloth’s pre-quantized 4-bit version of Llama 3 8B. The code sample trains on an Alpaca dataset with the Alpaca instruction format. In terms of training cheaply, I don’t think the choice of data makes much of a difference, though. People can take my recipe and swap in other data.
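Swapping in other data mostly comes down to the formatting function. As a sketch, suppose a dataset has hypothetical `question` and `answer` columns and no separate input; the function below maps them onto the same Alpaca template with an empty input section. The column names are assumptions, and the EOS token is passed in (here a placeholder string) rather than read from a tokenizer so the sketch runs on its own.

```python
# Same Alpaca template as the training script.
alpaca_prompt = """Below is an instruction that describes a task, paired with an input that provides further context. Write a response that appropriately completes the request.

### Instruction:
{}

### Input:
{}

### Response:
{}"""


def make_formatting_func(eos_token, instruction_col="question", output_col="answer"):
    """Build a batched map() function for a dataset with (hypothetical) column names."""

    def formatting_func(examples):
        texts = [
            # Empty string fills the "### Input:" slot; EOS marks the end of each example.
            alpaca_prompt.format(instruction, "", output) + eos_token
            for instruction, output in zip(examples[instruction_col], examples[output_col])
        ]
        return {"text": texts}

    return formatting_func


batch = {"question": ["What is 2 + 2?"], "answer": ["4"]}
fmt = make_formatting_func(eos_token="<EOS>")  # "<EOS>" is a placeholder
print(fmt(batch)["text"][0])
```

In the training script, this would stand in for `formatting_prompts_func`, e.g. `dataset.map(make_formatting_func(tokenizer.eos_token), batched=True)`.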

What do I use for file storage?

Amazon S3 isn’t a file system, it’s an object store, which makes it the perfect file system for me to use for storing my models. I have the Mountpoint for Amazon S3 CSI driver installed on my EKS cluster, which lets me expose an S3 bucket to my pods through a PersistentVolumeClaim (PVC). I haven’t benchmarked its I/O throughput, but for the model and dataset sizes I’ve been using, it’s been great so far.
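The post doesn’t show the volume definitions, so here is a sketch of what static provisioning with the Mountpoint CSI driver generally looks like. To keep new examples in one language, it is Python that emits a Kubernetes `List` as JSON (which `kubectl apply -f -` accepts). The bucket, region, and resource names are placeholders, and the field names follow the driver’s static-provisioning examples; verify them against the driver’s documentation for the version you install.

```python
import json

# Placeholder values: substitute your own bucket, region, and names.
BUCKET = "my-training-bucket"
REGION = "us-west-2"


def s3_static_volume(name, bucket, region, size="1200Gi"):
    """Build a PV/PVC pair for the Mountpoint for Amazon S3 CSI driver."""
    pv = {
        "apiVersion": "v1",
        "kind": "PersistentVolume",
        "metadata": {"name": f"{name}-pv"},
        "spec": {
            # Kubernetes requires a capacity field; S3 itself has no fixed size.
            "capacity": {"storage": size},
            "accessModes": ["ReadWriteMany"],
            # allow-delete permits removing files, which checkpoint rotation
            # (save_total_limit) and shutil.rmtree both need.
            "mountOptions": ["allow-delete", f"region {region}"],
            "csi": {
                "driver": "s3.csi.aws.com",
                "volumeHandle": f"{name}-volume",  # must be unique per PV
                "volumeAttributes": {"bucketName": bucket},
            },
        },
    }
    pvc = {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": f"{name}-claim"},
        "spec": {
            "accessModes": ["ReadWriteMany"],
            "storageClassName": "",  # empty: bind statically, no dynamic provisioning
            "resources": {"requests": {"storage": size}},
            "volumeName": pv["metadata"]["name"],  # bind to the PV above
        },
    }
    return {"apiVersion": "v1", "kind": "List", "items": [pv, pvc]}


if __name__ == "__main__":
    # Example: python s3_volume.py | kubectl apply -f -
    print(json.dumps(s3_static_volume("outputs", BUCKET, REGION), indent=2))
```

A training pod would then reference the claim under `volumes` via `persistentVolumeClaim.claimName` and mount it wherever the script writes, for example `/mnt`. Mountpoint also has restrictions on modifying existing files, which are worth reading up on before pointing a trainer at it.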

So how much does it cost?

Let’s break down the costs of this setup. I’m not going to factor in the cost of the EKS cluster itself or any CPU nodes you need; I’m only going to focus on the cost of the actual GPUs. The node definitions I shared earlier in this post select only g6 instances. I allow larger instance types purely to improve availability: even though everything from the g6.xlarge up to the g6.8xlarge has only a single L4 GPU, the different amounts of CPU and memory make each size more or less appetizing to other customers. Since spot capacity is always AWS’s excess compute capacity, I’ll take whatever I can get when I’m playing with my infrastructure.

At the upper end of the spectrum, the g6.8xlarge has historically capped out at about $0.22 per hour in the us-west-2 region.

When g6.xlarge instances are available, the spot price is a whopping $0.085 an hour. That’s less than 9 cents per hour for a 24GB GPU!

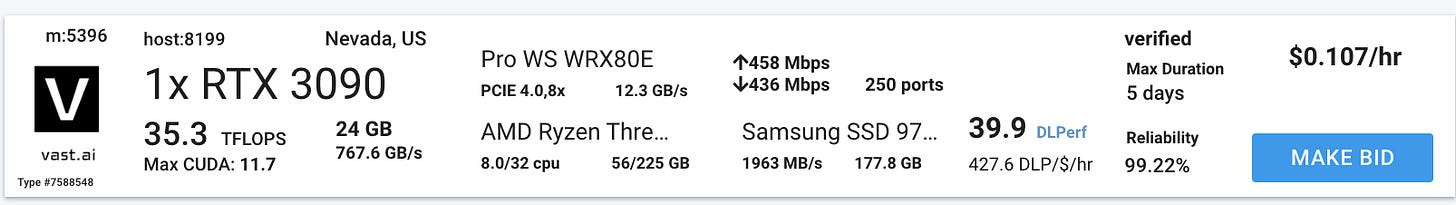

Even the absolute cheapest instance on Vast.ai is still $0.10 per hour.
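To check spot prices like these yourself, EC2’s `DescribeSpotPriceHistory` API returns per-Availability-Zone price points. The sketch below pages through it with boto3 and reduces the results to a min/max per instance type. The function names are hypothetical, the fetch step assumes boto3 is installed and AWS credentials are configured, and the summarizer is plain Python so it can be tried on a made-up sample (the sample prices are illustrative, not real quotes).

```python
from datetime import datetime, timedelta, timezone


def fetch_spot_history(instance_types, region="us-west-2", days=30):
    """Page through EC2 spot price history (requires boto3 and AWS credentials)."""
    import boto3  # imported lazily so summarize_spot_prices works without boto3

    ec2 = boto3.client("ec2", region_name=region)
    paginator = ec2.get_paginator("describe_spot_price_history")
    history = []
    for page in paginator.paginate(
        InstanceTypes=instance_types,
        ProductDescriptions=["Linux/UNIX"],
        StartTime=datetime.now(timezone.utc) - timedelta(days=days),
    ):
        history.extend(page["SpotPriceHistory"])
    return history


def summarize_spot_prices(history):
    """Return {instance_type: (min_price, max_price)} in USD per hour."""
    summary = {}
    for point in history:
        price = float(point["SpotPrice"])  # the API returns prices as strings
        low, high = summary.get(point["InstanceType"], (price, price))
        summary[point["InstanceType"]] = (min(low, price), max(high, price))
    return summary


# Made-up sample in the shape of SpotPriceHistory entries.
sample = [
    {"InstanceType": "g6.xlarge", "SpotPrice": "0.0850"},
    {"InstanceType": "g6.xlarge", "SpotPrice": "0.1100"},
    {"InstanceType": "g6.8xlarge", "SpotPrice": "0.2200"},
]
print(summarize_spot_prices(sample))
```

Against a live account, `summarize_spot_prices(fetch_spot_history(["g6.xlarge", "g6.8xlarge"]))` would give the observed range per size over the chosen window.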

And how do I get the $0.03 number that I clickbaited you with? Well, that’s roughly what it costs to process 1,000,000 tokens.

In my TrainingArguments, I set include_num_input_tokens_seen=True. I log every 5 steps, so I can see how long 5 steps take and how many tokens were processed over those steps. Then I can extrapolate. Five steps take approximately 30 seconds and process about 24,512 tokens. That’s about 817 tokens per second, which at the $0.085 per hour g6.xlarge spot price comes out to roughly $0.029 per 1,000,000 tokens.
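The extrapolation above is a couple of lines of arithmetic. Here it is as two small functions using the figures from this post: about 24,512 tokens per 30-second, 5-step logging window, and the two ends of the observed g6 spot price range. The function names are hypothetical, and the sketch assumes throughput stays the same whichever g6 size Karpenter picks, since every candidate has the same single L4.

```python
def tokens_per_second(tokens_in_window, window_seconds):
    """Throughput over one logging window (e.g. the change in num_input_tokens_seen over 5 steps)."""
    return tokens_in_window / window_seconds


def cost_per_million_tokens(tokens_per_sec, price_per_hour):
    """USD to process 1,000,000 tokens at a steady throughput and hourly price."""
    hours_per_million = 1_000_000 / tokens_per_sec / 3600
    return hours_per_million * price_per_hour


tps = tokens_per_second(24_512, 30)
for label, price in [("g6.xlarge spot", 0.085), ("g6.8xlarge spot (upper end)", 0.22)]:
    print(f"{label}: ${cost_per_million_tokens(tps, price):.4f} per 1M tokens")
```

With these inputs it comes out to just under $0.03 per million tokens on a g6.xlarge, and roughly $0.075 at the top of the observed price range, so the headline number depends on getting the smallest instance.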

Let me know if you’d be interested in tutorials for the rest of the infrastructure.

Thanks for reading.