Solving the 2-year-old Traefik Labs job application challenge

I wonder when they'll do a newer one

The other night while browsing Twitter, I got hit with an ad from Traefik Labs about their “platform engineering” job opening. I’ve been using Traefik as my ingress controller since the very beginning of my experience with Kubernetes. It does everything that I want and need it to do, with the smallest amount of configuration. So, I clicked on the ad, curious about what the job description would look like.

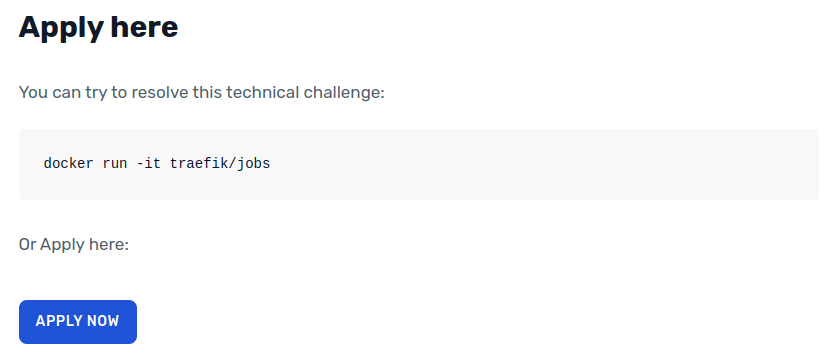

When you scroll to the bottom of the page, you’ll see the following vague challenge:

I was intrigued. I checked my calendar and made sure that there was nothing for me to do that following Saturday. I had no idea what was going to happen or what I would need to do, but I thought it would be really interesting to try. I didn’t know how long it was going to take, but I had the entire afternoon available to me so I figured I’d give it a shot.

Come Saturday afternoon, the game was afoot. I ran the docker command.

⇒ docker run -it traefik/jobs

Helmsman, where are you? 🤔

Okay. So, this is all the container prints. Fortunately for me, I already knew what this clue meant. The word Kubernetes, my favorite and dear container orchestration platform, comes from the Greek word for “helmsman”. Instantly, I thought, I wonder if I’m supposed to run this container in a Kubernetes cluster? I went to run this program in my personal EKS cluster.

⇒ kubectl run jobs --rm -it --restart=Never --image=traefik/jobs

It seems I do need more permissions... May I be promoted cluster-admin? 🙏

Hmmmm, it seems Helmsman deployment has an issue 😒

pod "jobs" deleted

pod default/jobs terminated (Error)

Aha! Okay. So, I’m on to something here, but the logs are indicating that it needs more permissions. Unfortunately, there’s not really a great way for me to find out which permissions it needs, so I was just going to give it the cluster-admin role so that I wouldn’t have to guess. That being said, it’s untrusted code, so I knew to create a separate cluster to run this container in, so that I wouldn’t risk anything.

⇒ kind create cluster

And now I needed to create a service account to assign the cluster-admin role to.

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: jobs
  labels:
    app: jobs
spec:
  selector:
    matchLabels:
      app: jobs
  template:
    metadata:
      labels:
        app: jobs
    spec:
      serviceAccount: jobs
      serviceAccountName: jobs
      containers:
        - name: jobs
          image: traefik/jobs
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: jobs
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: jobs-cluster-admin
subjects:
  - kind: ServiceAccount
    name: jobs
    namespace: default
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io

Once I created all of these resources in the cluster, I’d get:

⇒ kubectl logs deployment/jobs

Look at me by the 8888 ingress 🚪

Port 8888, eh? I added that port to the pod spec in my deployment and then I created the following Service and Ingress definitions:

---
apiVersion: v1
kind: Service
metadata:
  name: jobs
spec:
  selector:
    app: jobs
  ports:
    - protocol: TCP
      port: 8888
      targetPort: 8888
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: jobs
spec:
  rules:
    - http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: jobs
                port:
                  number: 8888

And this is where things actually started to get really weird. The logs were now showing:

⇒ kubectl logs deployment/jobs

It seems I do need more permissions... May I be promoted cluster-admin? 🙏

Look at me by the 8888 ingress 🚪

For some reason, when I applied the entire set of manifests, I was still getting this weird permission message. Then I’d get the log about ingress, and then the container would crash.
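A quick way to rule RBAC in or out at this point is to ask the API server directly what the service account is allowed to do. The sketch below wraps kubectl auth can-i with impersonation. The helper name sa_is_cluster_admin is made up for illustration, and it assumes your own kubeconfig user may impersonate service accounts (the default admin user of a kind cluster can).

```shell
# Hypothetical helper: ask the API server whether a service account may
# perform every verb on every resource. kubectl prints "yes" or "no".
sa_is_cluster_admin() {
  namespace="${1:-default}"   # namespace of the service account
  name="${2:-jobs}"           # service account name from the manifests above
  kubectl auth can-i '*' '*' \
    --as="system:serviceaccount:${namespace}:${name}"
}

# Usage against a live cluster:
#   sa_is_cluster_admin default jobs
```

If this prints yes while the container keeps asking for cluster-admin, the role binding is probably not the real problem.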

Look, at this point, I’d love to say that I had some kind of genius brain blast, but the reality is that I spent about 2 hours just bashing my face against the same problem over and over again. I was trying every possible permutation of doing RBAC in the cluster. I was creating new roles, thinking that maybe a cluster role wasn’t correct for some reason. I was trying roles with empty strings, roles with wildcard values. I was even trying to modify the pod’s security context: making the container privileged, trying to run as different users. Nothing was working. And then suddenly, I had a really strange idea.

I went to go check on this image on DockerHub. I noticed that the image hadn’t been updated in over 2 years. I thought to myself, hmm, I wonder if there’s some kind of backwards incompatible change in the Kubernetes control plane API that’s causing some kind of bug here. The very next thing I did was to build a kind cluster using an older version of Kubernetes. Two years ago, Kubernetes v1.20 was an active version, so let’s go with that. So I made a kind cluster using the following configuration:

kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
  - role: control-plane
    image: kindest/node:v1.20.15

Lo and behold, after rebuilding the kind cluster with an older version of Kubernetes and deploying the container again, what do I see?

⇒ kubectl logs deployment/jobs

W0220 21:06:28.518812 1 warnings.go:67] extensions/v1beta1 Ingress is deprecated in v1.14+, unavailable in v1.22+; use networking.k8s.io/v1 Ingress

You have set up your cluster in good taste 😉

Now that you have set up an ingress... You should be able find me...

Which tells me two things:

It looks like I was actually right about the API deprecation. I’m going to guess that any cluster with Kubernetes version 1.22 or later will not work for this challenge at all. What I’m not sure about is whether or not figuring this out was supposed to be part of the challenge, or if Traefik Labs just didn’t think about this edge case.

Looks like I should be able to send this application a request. The pod is no longer crashing after starting up.
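To check the first point on any given cluster, you can look at which API group versions the control plane still serves. This is a minimal sketch that takes the deprecation warning above at face value; ingress_api_versions is a hypothetical helper name, and all it does is filter the output of kubectl api-versions down to the versions that have carried Ingress.

```shell
# Hypothetical helper: read API versions on stdin (one per line, as printed
# by `kubectl api-versions`) and keep only the ones that have served Ingress.
ingress_api_versions() {
  grep -E '^(extensions/v1beta1|networking\.k8s\.io/v1(beta1)?)$'
}

# Usage against a live cluster:
#   kubectl api-versions | ingress_api_versions
```

If extensions/v1beta1 is missing from the result, a client that only knows the old Ingress API (as the warning suggests this one does) has nothing to talk to.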

So in one terminal I start a port-forward:

⇒ kubectl port-forward svc/jobs 8888

And in another terminal, I send a curl request:

⇒ curl http://localhost:8888/

Come on, use that damn ingress please 😬

Clever. At this point, I guess I could go install an ingress controller into this cluster. It would be poetic to use the actual Traefik ingress controller, but I was too lazy. I just took a wild guess that the application was determining whether or not the requests were going through an ingress controller based on HTTP headers. For any kind of proxy, it’s really common to set a few headers, all with the X-Forwarded-* prefix. With a little bit of testing, I was able to get the container to speak!

⇒ curl -H "X-Forwarded-Host: foo" http://localhost:8888/

<!DOCTYPE html>

<html lang="en">

<head>

</head>

<body>

<center>

I have to tell you something...</br>

Something that nobody should know.</br>

However, everyone could see it.</br>

It's even part of my public image.</br>

Come back when you know more.</br>

But remember, it's a secret 🤫</br>

</center>

</body>

</html>

So now I’ve got this riddle in the form of an HTML page. Instead of trying to solve the riddle, it made me wonder if there was a way to find any kind of secret message that’s also in the form of an HTML page. So I wanted to take a look at the binary for the application.

One thing that I had noticed about this container is that the entire thing was about 30MB in size. On top of that, from my experience with Traefik itself, I know that Traefik Labs likes to use scratch as the base image for their containers. Thirdly, Traefik is a Go application, so my guess was that if I tried to get a shell in this container to inspect the binary, I wouldn’t have any luck. And sure enough:

⇒ docker run -it --entrypoint bash traefik/jobs

docker: Error response from daemon: failed to create shim task: OCI runtime create failed: runc create failed: unable to start container process: exec: "bash": executable file not found in $PATH: unknown.

ERRO[0000] error waiting for container: context canceled

⇒ docker run -it --entrypoint ash traefik/jobs

docker: Error response from daemon: failed to create shim task: OCI runtime create failed: runc create failed: unable to start container process: exec: "ash": executable file not found in $PATH: unknown.

ERRO[0000] error waiting for container: context canceled

⇒ docker run -it --entrypoint sh traefik/jobs

docker: Error response from daemon: failed to create shim task: OCI runtime create failed: runc create failed: unable to start container process: exec: "sh": executable file not found in $PATH: unknown.

ERRO[0000] error waiting for container: context canceled

So what happens next? Now we do a little snooping and get creative.

⇒ docker inspect traefik/jobs | jq '.[0].Config.Entrypoint'

[
  "/start"
]

All we see is that the entrypoint is a file named start. And if we look at the layer details on DockerHub, we’ll notice that there’s a label for the container, and a single file is copied in before the entrypoint is set. Alright, let’s dance, Traefik Labs. I can’t get a shell inside the container, and I didn’t think docker cp was an option without a running container, but you know what I can do? Multi-stage docker builds. I write the following Dockerfile:

FROM traefik/jobs
FROM alpine:3.14
COPY --from=0 /start /start

So I’m copying the binary from the first stage, which is the Traefik Labs container, into an alpine container, so that I have a shell and utilities like strings, which will let me do a bit of introspection.

⇒ docker build -t sliceofexperiments/jobs . && docker run --rm -it sliceofexperiments/jobs strings /start

... There's a lot of output ...

I do a bit of digging, both with strings and by opening the raw binary in vim. And I do actually find the entirety of that HTML document. Unfortunately, I don’t find anything else in the binary that would be useful.
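As an aside, docker cp does not need a running container: it also works on one that has been created but never started. Here is a sketch of that alternative route to the same binary. The helper name extract_start_binary and the default destination ./start are made up for illustration, and it assumes the image is already available locally.

```shell
# Hypothetical helper: copy /start out of an image without ever running it.
extract_start_binary() {
  image="${1:-traefik/jobs}"
  dest="${2:-./start}"
  # `docker create` prints the ID of a new, stopped container.
  cid="$(docker create "$image")" || return 1
  # `docker cp` reads from the container filesystem even when it is stopped.
  docker cp "$cid:/start" "$dest"
  status=$?
  # Clean up the throwaway container either way.
  docker rm "$cid" >/dev/null
  return "$status"
}

# Usage:
#   extract_start_binary traefik/jobs ./start && strings ./start | less
```

The multi-stage build has one advantage over this: it leaves you with an image that already has a shell and strings next to the binary.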

What was the riddle again?

I have to tell you something...

Something that nobody should know.

However, everyone could see it.

It's even part of my public image.

Come back when you know more.

But remember, it's a secret 🤫

The line here that stood out to me the most was the one about how the secret is “part of my public image”. And you know what, when I was looking at the layer details earlier, what did we see? That’s right, we saw that label value!

LABEL helmsman=dcc9c530767c102764d45d621fc92317

But what do we do with the label? I spent hours trying to figure this out. I added this label to all the resources in the cluster, thinking that maybe putting it in the Kubernetes manifests would unlock something. If you run the start binary yourself and pass --help, you’ll see a few flags that you can set, so I thought maybe I needed to set those arguments with this value. I was sending HTTP requests to the service with custom headers set to these values. I was sending JSON bodies, I was sending query parameters, Authorization headers, bearer tokens and basic auth, etc. Nothing was working.

After a few hours of trying everything, I wondered if I needed to take things a little more literally… What if I just made an actual secret in the cluster with these values?

⇒ kubectl create secret generic helmsman --from-literal=helmsman=dcc9c530767c102764d45d621fc92317

And I restarted the pod one more time, set up the port-forward, and sent another request with curl, and what did I see but victory!

⇒ curl -H "X-Forwarded-Host: foo" http://localhost:8888/

<!DOCTYPE html>

<html lang="en">

<head>

</head>

<body>

<iframe src="only-for-winners" width="100%" height="2000" frameborder="0" marginheight="0" marginwidth="0">Loading...</iframe>

</body>

</html>

The application was scanning the cluster for secrets with this value!
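The whole last step can be sketched as a couple of small shell functions: read the label from the local image metadata, create the secret from it, and read it back to confirm what the cluster stored. The function names are hypothetical. The secret name and key simply mirror the command above, and it is an assumption on my part that the application cares about exactly those.

```shell
# Hypothetical helper: print the value of the "helmsman" label on an image.
helmsman_label() {
  docker inspect --format '{{ index .Config.Labels "helmsman" }}' \
    "${1:-traefik/jobs}"
}

# Hypothetical helper: create the secret from whatever the label contains.
create_helmsman_secret() {
  value="$(helmsman_label "$1")" || return 1
  [ -n "$value" ] || return 1   # bail out if the image has no such label
  kubectl create secret generic helmsman --from-literal="helmsman=$value"
}

# Secret data is stored base64-encoded, so decode it when reading it back.
stored_secret_value() {
  kubectl get secret helmsman -o jsonpath='{.data.helmsman}' | base64 --decode
}

# Usage:
#   create_helmsman_secret traefik/jobs && stored_secret_value
```

Reading the value back is a cheap sanity check that the string in the cluster matches the label exactly, with no stray newline or quoting.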

The <iframe> links to a Google Form, one that asks for your name, contact information, and the story of how you solved the challenge. I don’t want to spoil ALL of the fun for Traefik Labs. So if you want to see the actual Google Form URL, you’ll at the very least need to go through the steps I did here to get the actual link.

That’s all I’ve got for this newsletter post. This time it was a bit less of an experiment, but it was a fun little challenge. All in all, it took me about 7 hours total of face-rolling my keyboard until I got this all figured out. Thanks for reading and please consider subscribing!